With the ever-increasing number of digital photos and videos being recorded these days, many of us have gained experience in framing and shooting media. However, the jump to immersive 360-degree media brings a whole new set of possibilities, challenges and things to keep in mind.

Everything is in the shot

First and foremost, there is no “behind the camera.” When shooting any scene, you need to either leave the room or make yourself part of the shot. Most cameras available today work with a companion phone app that lets you take a photo or video remotely, so you can hide nearby, out of sight. Recently, when I shot the front of our office, I hid behind a pole in front of the building to keep myself out of the scene.

Limit movement of the camera

When planning your shoot, try to let the person viewing the experience be in charge of where they go. Use hotspots to allow for “teleporting” around an area or between scenes in a “first-person” perspective. Any disconnect between the camera’s movements and the viewer’s own body movements can be disorienting at best, and nauseating at worst. I think we’ve all seen enough YouTube videos of grandma riding a roller coaster in VR to know that camera movement can be problematic in an immersive situation.

Another, less obvious cause of disorientation is an off-level horizon. In a standard photo, tilting the shot can add a nice effect, but in VR, a tilted horizon forces the viewer to tilt their head to the side to keep their balance. Make sure your camera is level while you are shooting.
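If you can read a three-axis accelerometer on your camera, phone, or rig (an assumption: not every setup exposes one), the camera's tilt can be estimated from the direction of gravity while it is at rest. This is a minimal sketch; the axis convention and the 1-degree tolerance are hypothetical choices, not values from any particular camera.

```python
import math

def tilt_degrees(ax, ay, az):
    """Estimate (roll, pitch) in degrees from one accelerometer reading at rest.

    Hypothetical axis convention: x points toward the camera front, y to its
    left, z straight up, so a perfectly level camera reads about (0, 0, 9.81).
    """
    roll = math.degrees(math.atan2(ay, az))                    # tilt to the side
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))  # tilt forward or back
    return roll, pitch

def is_level(ax, ay, az, tolerance_deg=1.0):
    """True if both roll and pitch are within tolerance_deg (an arbitrary threshold)."""
    roll, pitch = tilt_degrees(ax, ay, az)
    return abs(roll) <= tolerance_deg and abs(pitch) <= tolerance_deg

g = 9.81
print(is_level(0.0, 0.0, g))  # True
tilt = math.radians(5)        # camera leaning 5 degrees to one side
print(is_level(0.0, g * math.sin(tilt), g * math.cos(tilt)))  # False
```

Roll is the tilt that skews the horizon, so it is the value to watch most closely.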

Camera Positioning

Framing a subject in a standard photo is simple: look at the subject in the screen or viewfinder, adjust your distance or zoom, and you are good to go. With a 360 camera, you generally don’t have the luxury of a viewfinder, and if you do have the image on the screen of your phone, it’s hard to really know how the final image will look because the spherical image is stretched flat and rectangular on the screen.

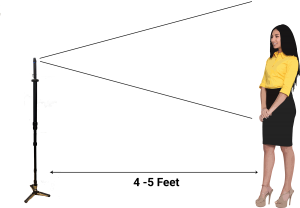

In general, the camera should be about 4-5 feet from the main subject. That gives the subject enough prominence in the shot without being uncomfortably close. The feeling of someone being “in your personal space” is easy to create in VR, and it is generally not what you want. Conversely, objects far from the camera lose detail quickly, so if detail is important, the subject needs to be close.
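To see why distance matters so much, you can estimate how many pixels a subject occupies. This sketch assumes a 3840 x 1920 equirectangular frame (about 10.7 pixels per degree on both axes), a hypothetical subject 5.5 feet tall, and a lens level with the middle of the subject.

```python
import math

FRAME_WIDTH_PX = 3840                  # assumed 4K equirectangular frame (3840 x 1920)
PX_PER_DEGREE = FRAME_WIDTH_PX / 360   # same vertically: 1920 px over 180 degrees

def subject_height_px(subject_height_ft, distance_ft):
    """Approximate pixels of height a subject covers, assuming the lens is
    level with the middle of the subject."""
    half_angle = math.degrees(math.atan((subject_height_ft / 2) / distance_ft))
    return 2 * half_angle * PX_PER_DEGREE

for distance in (4.5, 30):  # hypothetical 5.5-foot-tall subject
    print(distance, round(subject_height_px(5.5, distance)))
```

Under these assumptions the subject spans about 670 pixels of height at 4.5 feet but only about 110 pixels at 30 feet.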

Equally important, the camera should sit slightly below eye level when you are filming a person. In VR, looking straight ahead or slightly upward is comfortable for the viewer, whereas having to look steeply up or down can be very disorienting and can even cause someone to lose their balance.
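The viewing angle a given camera height produces is simple trigonometry. This sketch, using hypothetical heights in feet, computes how far the viewer must look up (positive) or down (negative) to meet the subject's eyes.

```python
import math

def gaze_pitch_degrees(camera_height_ft, eye_height_ft, distance_ft):
    """Angle the viewer must look up (positive) or down (negative) to meet the subject's eyes."""
    return math.degrees(math.atan2(eye_height_ft - camera_height_ft, distance_ft))

# Hypothetical setups: subject's eyes at 5.3 feet, camera 4.5 feet away.
print(round(gaze_pitch_degrees(5.0, 5.3, 4.5), 1))  # camera on a stand just below eye level
print(round(gaze_pitch_degrees(2.0, 5.3, 4.5), 1))  # camera on a low table
```

The first setup produces a gentle upward angle of under 4 degrees; the second forces the viewer to look up more than 35 degrees.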

Be aware of where the stitch lines will fall in the final shot. If your camera has two fisheye lenses, objects that lie along the “prime meridian” where the two fisheye views meet can be distorted by the stitching process, so keep important subjects and movement out of these areas as much as possible.
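For a dual-fisheye camera, you can check whether a subject sits close to a stitch seam. This sketch assumes the lenses face exactly front and back, so the seams fall 90 degrees to either side of the camera's front, and that the front is at the horizontal center of the equirectangular frame. The 15-degree margin is a hypothetical value; check your own camera's documentation for where its seams actually fall.

```python
SEAM_YAWS_DEG = (90.0, -90.0)  # assumed: lenses face exactly front (0) and back (180)

def yaw_from_x(x_px, width_px=3840):
    """Map an equirectangular column to yaw in degrees, camera front (0) at the image center."""
    return (x_px / width_px) * 360.0 - 180.0

def angular_gap(a_deg, b_deg):
    """Smallest absolute difference between two angles, in degrees (0 to 180)."""
    d = (a_deg - b_deg) % 360.0
    return min(d, 360.0 - d)

def near_seam(yaw_deg, margin_deg=15.0):
    """True if yaw_deg is within margin_deg (a hypothetical value) of either seam."""
    return min(angular_gap(yaw_deg, s) for s in SEAM_YAWS_DEG) < margin_deg

print(near_seam(yaw_from_x(1920)))  # False: dead ahead, as far from the seams as possible
print(near_seam(yaw_from_x(1000)))  # True: about 4 degrees from the seam on the left
```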

Finally, even though the camera captures a full 360 degrees, its front still matters. The front sets the initial view a person sees on entering a scene, so it should point at the focal point of your image or video.

Lighting

In standard photography, you will often use additional lighting or a flash to illuminate an area that lacks good natural light. With 360, you can’t add visible lights, because they will be in the scene too, and you’ll end up blinding anyone who turns to look at them.

The best solution, of course, is good natural lighting in the scene, but that’s not always possible. If a particular area must be well lit, try hiding extra lights behind other objects in the scene, or use small directional spotlights pointed toward the subject and away from the camera. Also, consider the existing lights in the room or area; swapping in higher-power fixtures may give you the lighting effect you need.

Resolution

Always capture your source material at 4K resolution or higher. Remember that those 4K pixels are split between multiple lenses and spread over a full 360 degrees, so 4K images and video give you only about HD resolution in each hemisphere. If the resulting video is too large or needs too much bandwidth (see the compression section below!), you can always lower the resolution to HD if the subject matter doesn’t need 4K, but you can’t go the other way.
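The hemisphere arithmetic is easy to check. This sketch assumes a 3840-pixel-wide equirectangular frame and a hypothetical 100-degree headset field of view, and counts how many horizontal pixels land inside a given view.

```python
def pixels_across(fov_deg, frame_width_px=3840):
    """Horizontal pixels of an equirectangular frame that fall inside a field of view."""
    return frame_width_px * fov_deg / 360

print(pixels_across(180))         # 1920.0: one hemisphere, the width of a 1080p HD frame
print(round(pixels_across(100)))  # 1067: an assumed ~100-degree headset view
```

So a viewer in a headset sees only about 1,067 of the frame's 3,840 horizontal pixels at any moment.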

After the shot

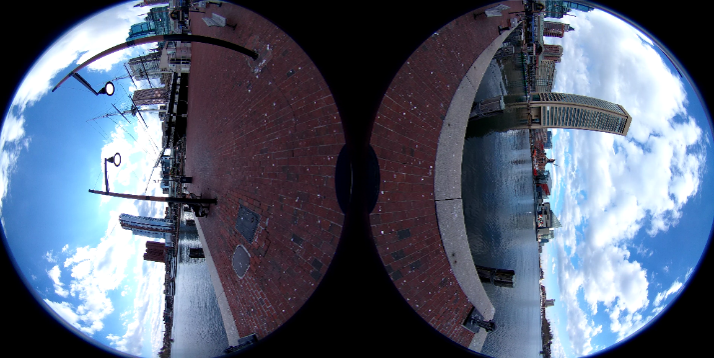

One thing most new 360 videographers don’t realize is that, unlike 360 photos, raw 360 video straight off the camera is not yet ready to use. When you first take the video off the camera, it looks like this:

Each frame of the video consists of two fisheye views, one from the front of the camera and one from the back. The video then needs to be run through the dedicated stitching software that comes with the camera, which matches the edges of the two fisheye views together to form a sphere. When that process is done, which can take quite a while, you get an “equirectangular” image that looks like this:

Equirectangular is the format actually used for 360 video and images. It’s a big word that simply means a full sphere mapped onto a rectangular surface, which is what gives the top and bottom of the image their stretched appearance. A 360 photo is a single frame, so it isn’t very processor-intensive to map to equirectangular format and can be stitched on the camera itself. Video, at 30 frames per second, needs more horsepower than a typical camera has, so the stitching is handled by the companion program.
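The geometry behind both the stretching and the stitching can be sketched in a few functions. Each equirectangular row is a line of latitude and each column a line of longitude, so every row has the same pixel width even though real circles of latitude shrink toward the poles; features are drawn 1/cos(latitude) times wider than at the equator. The stitching lookup asks, for each output pixel, which lens saw that direction and where in its image circle. It assumes an ideal equidistant fisheye model, lenses facing exactly front and back with a hypothetical 190-degree field of view, 1920-pixel lens images, and no blending or lens calibration, all of which real stitching software handles per camera.

```python
import math

EQ_W, EQ_H = 3840, 1920  # assumed 4K equirectangular output

def pixel_to_lon_lat(x, y, eq_w=EQ_W, eq_h=EQ_H):
    """Longitude/latitude in radians; longitude 0 is the camera front, latitude +pi/2 is straight up."""
    lon = (x / eq_w) * 2 * math.pi - math.pi
    lat = math.pi / 2 - (y / eq_h) * math.pi
    return lon, lat

def horizontal_stretch(lat):
    """How many times wider a feature is drawn at this latitude (radians) than at the equator."""
    return 1.0 / math.cos(lat)

def equirect_to_fisheye(x, y, lens_px=1920, fov_deg=190.0):
    """Return (lens, u, v): which lens sees this output pixel and where in that lens image.

    Hypothetical model: ideal equidistant fisheyes facing front and back, no blending.
    """
    lon, lat = pixel_to_lon_lat(x, y)
    # Unit direction vector: x right, y up, z toward the camera front.
    dx = math.cos(lat) * math.sin(lon)
    dy = math.sin(lat)
    dz = math.cos(lat) * math.cos(lon)
    lens = "front" if dz >= 0 else "back"
    if lens == "back":
        # Rotate 180 degrees about the vertical axis so the back lens looks down +z.
        dx, dz = -dx, -dz
    theta = math.acos(max(-1.0, min(1.0, dz)))  # angle away from the lens's optical axis
    # Equidistant projection: distance from the circle's center grows linearly with theta.
    r = theta / math.radians(fov_deg / 2) * (lens_px / 2)
    phi = math.atan2(dy, dx)                     # direction around the optical axis
    center = lens_px / 2
    return lens, center + r * math.cos(phi), center - r * math.sin(phi)

print(round(horizontal_stretch(math.radians(60)), 3))  # 2.0
print(equirect_to_fisheye(1920, 960))                  # ('front', 960.0, 960.0)
```

At 60 degrees of latitude, for example, everything is drawn twice as wide as at the equator, and the center of the equirectangular frame maps to the center of the front lens image.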

Video Compression

Compression is one of the most critical steps in producing VR content. 4K video can generate incredibly large files that are hard to work with on your local machine, and that same file size makes 4K video much more difficult to stream over the internet or transfer to a phone.

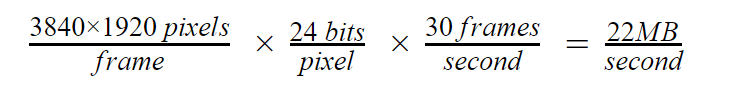

Let’s consider the case of uncompressed 4K video. To be considered 4K, each frame should be a minimum of 3840 x 1920 pixels. Each pixel requires 24 bits to represent its color, and for 30 frames a second, you would get:

At 22 MB per second, a one minute video would be over 1.3GB in size. This would be an unusable amount of data generated from the camera for most people, and certainly not something that could be used on the internet.

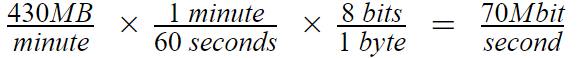

Fortunately, the cameras do some compression natively before storing it. For example, a Ricoh Theta V produces approximately 430MB of 4K video for each minute recorded, which is about one-third of the size of the raw footage.
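The same arithmetic as a short script, which also compares the raw rate with the Theta V’s output. Decimal megabytes and gigabytes (powers of 1,000) are assumed throughout.

```python
# Uncompressed size of 4K 360 video, using the figures from the text.
WIDTH, HEIGHT = 3840, 1920   # 4K equirectangular frame
BITS_PER_PIXEL = 24          # 8 bits each for red, green, and blue
FPS = 30

bytes_per_frame = WIDTH * HEIGHT * BITS_PER_PIXEL // 8
bytes_per_second = bytes_per_frame * FPS
bytes_per_minute = bytes_per_second * 60

# Ricoh Theta V figure quoted above; decimal megabytes (10**6 bytes) assumed.
camera_bytes_per_minute = 430 * 10**6
ratio = bytes_per_minute / camera_bytes_per_minute

print(f"{bytes_per_frame / 1e6:.1f} MB per frame")      # 22.1 MB per frame
print(f"{bytes_per_second / 1e6:.1f} MB per second")    # 663.6 MB per second
print(f"{bytes_per_minute / 1e9:.1f} GB per minute")    # 39.8 GB per minute
print(f"camera output is about 1/{ratio:.0f} of raw")   # camera output is about 1/93 of raw
```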

That makes the files much easier to manipulate, but they are still very large. Consider the bandwidth that would be required to view this file over the internet, keeping in mind that there are 8 bits in a byte and 60 seconds in a minute:

430 MB x 8 bits per byte / 60 seconds = about 57 Mbit per second

So, to stream that video smoothly, you would need a dedicated connection of around 70 Mbit per second, leaving some headroom above the video’s own bit rate, or a whole lot of time to download it first. That isn’t practical in most situations.
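The bandwidth figures can be reproduced with two small helpers. The 10 Mbit/s connection below is a hypothetical example, and protocol overhead is ignored.

```python
def megabits_per_second(size_mb, seconds):
    """Average bit rate needed to deliver size_mb megabytes in the given time."""
    return size_mb * 8 / seconds

def download_seconds(size_mb, link_mbps):
    """Time to download size_mb megabytes over a link of link_mbps megabits per second."""
    return size_mb * 8 / link_mbps

# One minute of Theta V footage, as quoted above.
print(round(megabits_per_second(430, 60), 1))  # 57.3
# A hypothetical 10 Mbit/s home connection.
print(download_seconds(430, 10))               # 344.0
```

At that hypothetical speed, each minute of video takes almost six minutes to download.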

The answer to the problem is further video compression. If you use a product like Adobe Premiere to handle cropping and editing of the video, it can also do a great job of compressing it. Experiment a bit with high, medium, and adaptive bit rates to find a good balance between file size and quality. You can generally get a file that is less than one-tenth of the original size with little perceptible difference in quality. There are also free tools that handle video compression very well; give HandBrake a try: http://handbrake.fr.
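When you experiment with bit rates, it helps to work backward from the file size you want. This sketch turns a target size and duration into an average video bit rate, a value you could enter as an average bit rate in HandBrake or a similar encoder. The 128 kbit/s audio allowance is a hypothetical default, and container overhead is ignored.

```python
def target_video_kbps(target_size_mb, duration_s, audio_kbps=128):
    """Average video bit rate (kbit/s) that should land an encode near target_size_mb.

    Assumes decimal megabytes and a constant-rate audio track of audio_kbps;
    container overhead is ignored, so the real file will be slightly larger.
    """
    total_kbits = target_size_mb * 8 * 1000
    return total_kbits / duration_s - audio_kbps

# Aim for one-tenth of the Theta V's 430 MB per minute.
print(round(target_video_kbps(43, 60)))  # 5605
```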

Start practicing with 360 video

As you can see, there is a bit more to 360 filming than there is to standard filming. But, as with just about anything, the most important lessons are the ones learned by doing, so get out there and start shooting today!